AI-Powered Blog Categorization Engine Organizes 300K+ News Articles and Cuts Editorial Tagging Effort by 60–70%

Automated semantic classification across 300K+ articles reduced manual tagging effort by 60–70% and improved content discoverability and editorial turnaround by ~35–45%, based on workflow benchmarks from large-scale NLP deployments in media platforms.

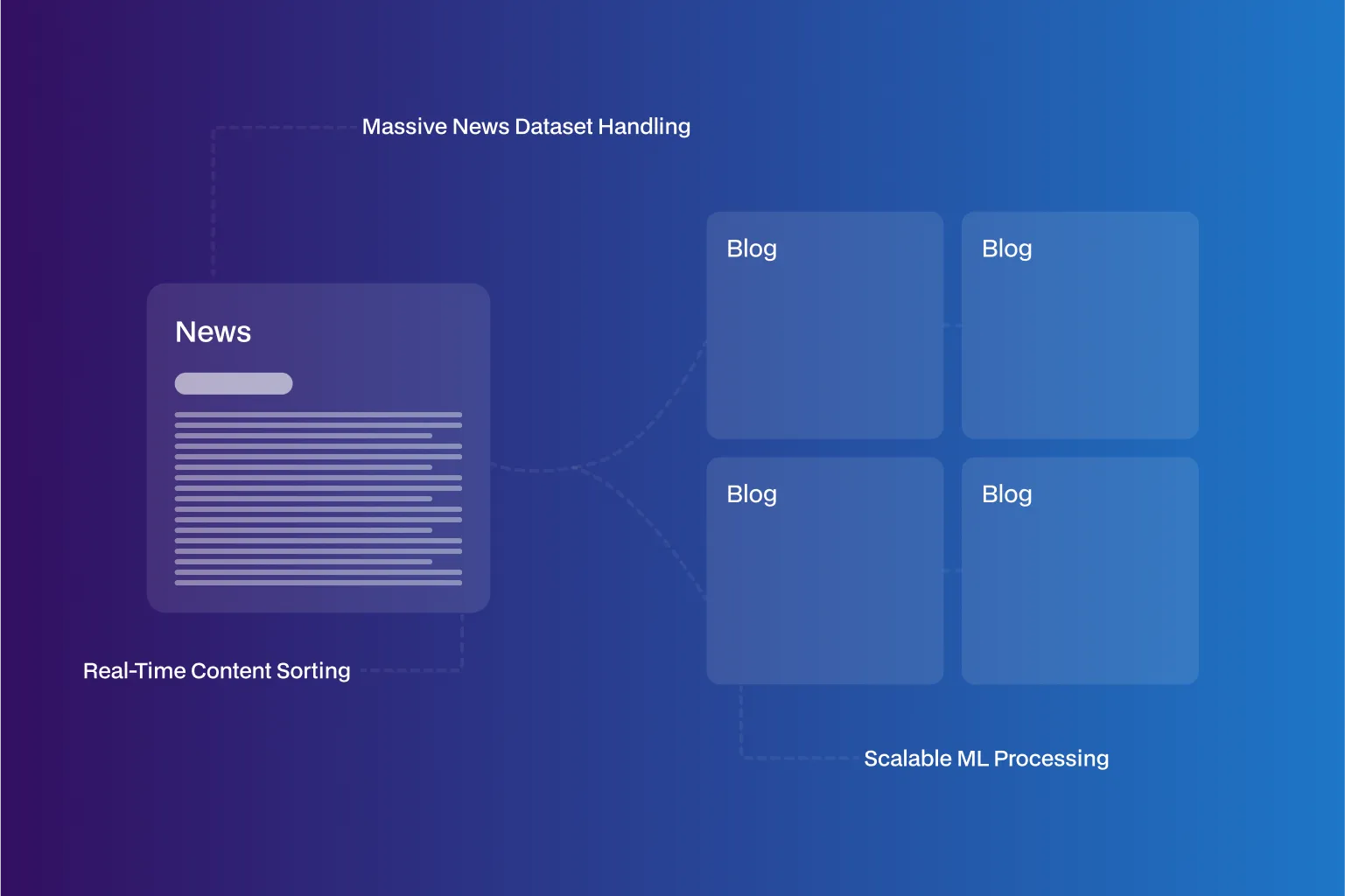

News-Based Semantic Segmentation for Categorization

Manual Tagging → AI Categorization

Reduced editorial tagging effort by 60–70%

Inconsistent Labels → Semantic NLP Classification

Improved category consistency and accuracy by 30–40%

Unstructured Text → SQL-Ready Data

Accelerated editorial search, filtering, and analytics by 35–45%

USP

- AI-driven semantic segmentation of news articles into relevant categories.

- Handles large-scale datasets of 300K+ records.

- Supports multilingual and multi-domain categorization (crime, informational, lifestyle, etc.).

- Streamlines storage of results in a SQL database for instant access and scalability.

Problem Statement

Business Problem

Like many media platforms publishing at scale, the client faced persistent operational challenges:

- Hundreds of thousands of articles required manual categorization and tagging

- Human-led tagging was slow, inconsistent, and costly

- Poor categorization reduced content discoverability, SEO performance, and reuse

- Editorial teams lacked a structured, queryable dataset for analytics and automation

With article volume growing daily, the client needed a fully automated, scalable categorization system that could classify content accurately across multiple domains and languages.

Solution

NeuraMonks engineered an end-to-end AI-driven semantic segmentation pipeline purpose-built for large-scale news and blog datasets.

What we delivered:

- NLP-based parsing of article titles and full content

- Hugging Face–powered language models trained for multi-domain classification

- Context-aware semantic segmentation to assign accurate categories (crime, lifestyle, informational, etc.)

- Multilingual content handling without rule-based tagging

- Automated storage of results in a structured SQL database for instant querying and downstream workflows

The pipeline transformed unstructured text into editorial-ready, structured intelligence.
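
As a rough illustration of the classification and storage steps listed above, the sketch below labels each article and writes the result to a SQL table. It is a minimal sketch under stated assumptions, not the production pipeline: the `article_categories` table, its columns, and the `classify` and `store_results` functions are hypothetical names, SQLite stands in for whichever SQL database was used, and a keyword lookup stands in for the trained Hugging Face model so the example runs offline.

```python
import sqlite3

# Hypothetical stand-in for the trained model: a keyword lookup, so the
# sketch runs without downloading anything. A real deployment would call
# the trained classifier here instead.
KEYWORDS = {
    "crime": ("arrest", "robbery", "police"),
    "lifestyle": ("recipe", "travel", "fitness"),
}


def classify(title: str, body: str) -> tuple[str, float]:
    """Return (category, confidence) for one article."""
    text = f"{title} {body}".lower()
    hits = {cat: sum(word in text for word in words)
            for cat, words in KEYWORDS.items()}
    total = sum(hits.values())
    if total == 0:
        # Assumed fallback category when no keyword matches.
        return "informational", 0.0
    best = max(hits, key=hits.get)
    return best, hits[best] / total


def store_results(conn: sqlite3.Connection, articles) -> int:
    """Classify (id, title, body) tuples and upsert one row per article."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS article_categories ("
        "article_id INTEGER PRIMARY KEY, "
        "title TEXT NOT NULL, "
        "category TEXT NOT NULL, "
        "confidence REAL NOT NULL)"
    )
    rows = [(a_id, title, *classify(title, body))
            for a_id, title, body in articles]
    conn.executemany(
        "INSERT OR REPLACE INTO article_categories VALUES (?, ?, ?, ?)", rows
    )
    conn.commit()
    return len(rows)
```

Once results sit in a table like this, editorial search and analytics reduce to ordinary queries, for example `SELECT category, COUNT(*) FROM article_categories GROUP BY category`.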

Challenges

Challenges Solved

Domain Diversity:

Trained models to generalize across varied writing styles, tones, and subject matter.

Ambiguous Content:

Applied semantic context extraction to handle articles that spanned multiple or unclear categories.
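
The source does not say how ambiguous articles were resolved. One common approach, sketched here as an assumption, is to keep every category whose model score clears a threshold and to flag low-confidence articles for an editor. The function name, the threshold value, and the label cap are hypothetical.

```python
def assign_categories(
    scores: dict[str, float],
    threshold: float = 0.35,
    max_labels: int = 3,
) -> tuple[list[str], bool]:
    """Return (categories, needs_review) from per-category model scores."""
    # Highest score first; ties keep the order in which they were given.
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    kept = [cat for cat, score in ranked if score >= threshold][:max_labels]
    needs_review = not kept
    if needs_review and ranked:
        # Nothing is confident enough: keep the top guess, but flag it.
        kept = [ranked[0][0]]
    return kept, needs_review
```

An article scoring 0.48 for crime and 0.41 for lifestyle would carry both labels, while one with no score above the threshold would keep its top guess and be queued for human review.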

Scale & Performance:

Optimized processing pipelines to handle 300K+ articles reliably and efficiently.
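
The source gives no detail on how the pipeline was optimized. A minimal sketch of one standard technique, fixed-size batching over a streamed article iterator so that only one batch is held in memory at a time, might look like this. `batched`, `process_in_batches`, `classify_batch`, and the batch size of 256 are assumptions for illustration.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator


def batched(items: Iterable, size: int) -> Iterator[list]:
    """Yield lists of up to `size` items without loading everything at once."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch


def process_in_batches(
    articles: Iterable[str],
    classify_batch: Callable[[list], list],
    size: int = 256,
) -> Iterator[tuple]:
    """Yield (article, label) pairs, calling the classifier once per batch."""
    for batch in batched(articles, size):
        labels = classify_batch(batch)
        if len(labels) != len(batch):
            raise RuntimeError("classifier returned a different number of labels")
        yield from zip(batch, labels)
```

Because both functions are generators, the article source can be a database cursor or a file reader rather than an in-memory list.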

Future Adaptability:

Designed the system to support new categories and editorial rules without re-architecting the pipeline.
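
One way to support new categories and editorial rules without touching pipeline code is to keep both in configuration and apply the rules after the model's prediction. The sketch below is an assumption about how that could work, not the delivered design: the JSON layout, the `title_contains` rule type, the "sports" category, and the function names are all hypothetical.

```python
import json

# Hypothetical configuration; in practice this could live in a file or table.
CONFIG_JSON = """
{
  "categories": ["crime", "lifestyle", "informational", "sports"],
  "rules": [
    {"title_contains": "match report", "category": "sports"}
  ]
}
"""


def load_config(raw: str) -> dict:
    """Parse the config and reject rules that point at unknown categories."""
    config = json.loads(raw)
    allowed = set(config["categories"])
    for rule in config["rules"]:
        if rule["category"] not in allowed:
            raise ValueError(f"rule targets unknown category: {rule['category']}")
    return config


def final_category(title: str, predicted: str, config: dict) -> str:
    """Apply editorial rules first, then fall back to the model's prediction."""
    lowered = title.lower()
    for rule in config["rules"]:
        if rule["title_contains"] in lowered:
            return rule["category"]
    if predicted not in config["categories"]:
        raise ValueError(f"model predicted unknown category: {predicted}")
    return predicted
```

In this sketch, editors can add a rule or a category by editing the configuration alone. A label the model itself must predict would likely still need retraining or a zero-shot model.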

Why NeuraMonks

Why Choose Us

- Outcome-driven AI delivery focused on editorial efficiency and data usability

- Deep pre-GPT-era expertise in NLP and large-scale text classification

- Proven experience with Hugging Face–based model training and deployment

- Production-grade pipelines designed for high-volume content processing

- Capability to deploy on-prem or air-gapped AI systems where required

- Strong understanding of media workflows, SEO, and content lifecycle management
