TABLE OF CONTENT

Over four thousand exposed AI agents are broadcasting corporate secrets to the internet right now—and most organizations don't even know they're vulnerable. Security researchers scanning the web with tools like Shodan have identified thousands of instances of autonomous AI assistants with wide-open admin panels, plaintext credentials sitting in unprotected files, and full system access granted without meaningful security controls. These aren't theoretical vulnerabilities in some obscure software—these are production deployments of Clawbot or Moltbot, autonomous AI agents that went viral in January 2026 and immediately became one of the most significant security incidents in the emerging agentic AI ecosystem.

Within seventy-two hours of widespread adoption, security teams at Palo Alto Networks, Tenable, Bitdefender, and independent researchers documented exposed control interfaces, remote code execution vulnerabilities, credential theft through infostealer malware, and a supply chain attack that distributed over four hundred malicious packages disguised as legitimate automation skills. This wasn't a sophisticated zero-day exploit chain—these were fundamental design decisions and deployment misconfigurations creating attack surfaces so large that commodity threat actors could compromise systems with minimal effort.

What makes this particularly concerning for enterprises is that these AI agents aren't just reading data—they're executing commands, managing credentials across dozens of services, and operating with the same privileges as the users who deployed them. When an AI agent gets compromised, attackers don't just steal files. They inherit autonomous access to WhatsApp conversations, Slack workspaces, Gmail accounts, cloud infrastructure APIs, and in some cases, direct shell access to corporate systems. The blast radius from a single compromised AI agent can exceed what most incident response teams are prepared to handle. This is the reality security leaders need to understand before deploying autonomous AI infrastructure in their organizations.

The Architecture That Creates a Perfect Storm for Attackers

Understanding why Clawbot or Moltbot represents such a significant security challenge requires examining the architectural decisions that make these systems both powerful and dangerous. Unlike cloud-based AI assistants that operate within vendor-controlled sandboxes, autonomous AI agents running on local infrastructure combine capabilities that create what security researcher Simon Willison termed the "Lethal Trifecta" for AI systems—and then add a fourth dimension that amplifies every risk:

- Full system access with user-level privileges: These agents run with the same permissions as the user account that launched them, meaning they can execute arbitrary shell commands, read and write files anywhere the user can access, make network requests to any destination without restriction, and interact with system resources including cameras, microphones, and location services. There are no sandboxing mechanisms limiting what actions the AI can take.

- Plaintext credential storage without encryption: Authentication tokens, API keys, session cookies, OAuth tokens, and even two-factor authentication secrets are stored in unencrypted JSON and Markdown files on the local filesystem. Unlike browser password managers that use operating system keychains or SSH keys that support encryption, these credentials are immediately usable by anyone who gains file system access—including commodity infostealer malware like RedLine, Lumma, and Vidar.

- Multi-platform integration creating exponential attack surface: A single compromised AI agent doesn't just expose one communication channel—it provides access to WhatsApp, Telegram, Discord, Slack, Signal, and potentially fifteen or more connected platforms simultaneously. Each integration requires its own authentication credentials, and all of them are stored together in the same unprotected configuration directory.

- No security guardrails by default: The developers made a deliberate design choice to ship without input validation, content filtering, or approval workflows enabled by default. This means untrusted content from messaging platforms, emails, web pages, and third-party integrations flows directly into the AI's decision-making process without policy mediation or security controls.

- Persistent memory retaining context across sessions: The AI maintains conversation history, learned behaviors, and operational context in long-term storage. Malicious instructions don't need to trigger immediate execution—they can be fragmented across multiple innocuous-looking messages, stored in memory, and assembled into exploit chains days or weeks later when conditions align for successful execution.

- Autonomous execution without human oversight: Once configured, these agents operate continuously in the background, making decisions and taking actions without requiring approval for each operation. This autonomy is exactly what makes them valuable for automation, but it also means compromised agents can operate maliciously for extended periods before detection.

This architecture is fundamentally different from traditional applications that operate within defined boundaries. Autonomous AI agents break the security model we've spent two decades building into modern operating systems—they're designed to cross boundaries, integrate systems, and act with user authority. Security researcher Simon Willison identified the "Lethal Trifecta" as the intersection of access to private data, exposure to untrusted content, and ability to communicate externally. Clawbot or Moltbot adds persistent memory as a fourth capability that acts as an accelerant, amplifying every risk in the trifecta and enabling time-shifted exploitation that traditional security controls can't detect.

Stop Planning AI.

Start Profiting From It.

Every day without intelligent automation costs you revenue, market share, and momentum. Get a custom AI roadmap with clear value projections and measurable returns for your business.

Real-World Threat Landscape: Active Exploitation in the Wild

The threats facing AI agent deployments aren't hypothetical future concerns—they're active exploitation campaigns happening right now. Security researchers have documented multiple threat actors targeting these systems with techniques ranging from opportunistic scanning to sophisticated supply chain attacks. Here are the attack vectors currently being exploited in production environments:

- Exposed control interfaces accessible from the internet: Security scans identified over four thousand instances with admin panels reachable from public IP addresses. Of the manually examined deployments, eight had zero authentication protecting full access to run commands and view configuration data. Hundreds more had misconfigurations that reduced but didn't eliminate exposure. These exposed interfaces allow attackers to impersonate operators, inject malicious messages into ongoing conversations, and exfiltrate data through trusted integrations.

- Credential harvesting from plaintext storage files: Attackers who gain filesystem access—whether through exposed control panels, compromised dependencies, or commodity malware—find immediate access to API keys, session tokens, and authentication credentials stored without encryption. Unlike encrypted credential stores that require decryption, these files are immediately usable. A single compromised JSON file can contain authentication for dozens of services simultaneously.

- Prompt injection attacks embedded in trusted messaging: Malicious actors send specially crafted messages through platforms like WhatsApp, Telegram, or email that trick the AI into executing unauthorized commands. Because the agent treats messages from unknown senders with the same trust level as communications from family or colleagues, attack payloads can hide inside forwarded "Good morning" messages or innocent-looking conversation threads.

- Supply chain attacks through malicious automation skills: Between late January and early February, threat actors published over four hundred malicious skills to ClawHub and GitHub, disguised as cryptocurrency trading automation tools. These skills used social engineering to trick users into running commands that installed information-stealing malware on both macOS and Windows systems. One attacker account uploaded dozens of near-identical skills that became some of the most downloaded on the platform.

- Memory poisoning enabling delayed exploitation: Attackers don't need immediate code execution—they can inject malicious instructions into the AI's persistent memory through fragmented, innocuous-seeming inputs. These instructions remain dormant until the agent's internal state, goals, or available tools align to enable execution, creating logic bomb-style attacks that trigger days or weeks after the initial compromise.

- Account hijacking and session impersonation: With access to session credentials and authentication tokens, attackers can fully impersonate legitimate users across all connected platforms. This enables surveillance of private conversations, manipulation of business communications, and execution of actions that appear to come from trusted accounts.

Geographic analysis shows concentrated exposure in the United States, Germany, Singapore, and China, with significant deployments across forty-three countries total. Enterprise security teams face a challenge they're not accustomed to—consumer-grade "prosumer" tools being deployed in corporate environments without IT oversight, creating visibility gaps where neither personal nor corporate security controls effectively monitor what's happening. At Neuramonks, we've worked with organizations deploying Agentic AI systems to implement proper threat modeling and security architectures before these visibility gaps become incident response nightmares.

The Most Critical Vulnerabilities Security Teams Must Address

The vulnerabilities affecting autonomous AI agents map closely to the OWASP Top 10 for Agentic Applications, representing systemic security failures rather than individual bugs. Security teams need to understand that fixing one misconfiguration won't secure these deployments—the entire threat model requires rethinking. Here are the critical vulnerabilities demanding immediate attention:

- Default insecure gateway binding exposing admin interfaces: Out-of-the-box configurations bind the control gateway to 0.0.0.0, making the admin interface accessible from any network interface. This single misconfiguration has led to thousands of exposed instances discoverable through simple internet scans. The gateway handles all authentication, configuration, and command execution—full compromise requires only finding an exposed instance and exploiting weak or missing authentication.

- Missing or inadequate authentication on control panels: Manual testing of exposed instances revealed eight with absolutely no authentication protecting administrative functions. Dozens more had authentication that could be bypassed through common techniques. Without proper authentication, anyone who reaches the control interface gains complete operational control over the AI agent and all its integrated services.

- Plaintext secrets vulnerable to commodity malware: Credentials stored in unencrypted JSON and Markdown files become trivial targets for information-stealing malware. These commodity tools—available for purchase on criminal forums for negligible cost—automatically scan for known credential storage locations and exfiltrate everything they find. No sophisticated attack techniques are required when secrets sit in plaintext.

- Indirect prompt injection through untrusted content sources: The AI can read emails, chat messages, web pages, and documents without validating source trustworthiness. Malicious actors craft content that manipulates the AI's behavior when processed, executing unauthorized commands like data exfiltration, file deletion, or malicious message sending—all appearing as legitimate agent actions.

- Unvetted supply chain in skills marketplace: The ClawHub registry that distributes community-created skills has no security review process before publication. Developers can upload arbitrary code disguised as useful automation, and users install these skills trusting that popular downloads indicate safety. The platform maintainer has publicly stated the registry cannot be secured under the current model.

- Excessive agency without governance frameworks: These agents have broad capabilities but lack corresponding governance controls defining what actions require approval, which data sources are trusted, and when to escalate decisions to humans. The absence of policy mediation means every capability is available for exploitation once an attacker compromises the agent.

- Cross-platform credential exposure amplifying breach impact: Compromising a single AI agent doesn't just expose one service—it provides access to every platform the agent connects to. One successful attack yields credentials for WhatsApp, Telegram, Discord, Slack, Gmail, cloud APIs, and potentially integration with workflow automation tools like n8n, multiplying the attacker's reach across the victim's entire digital footprint.

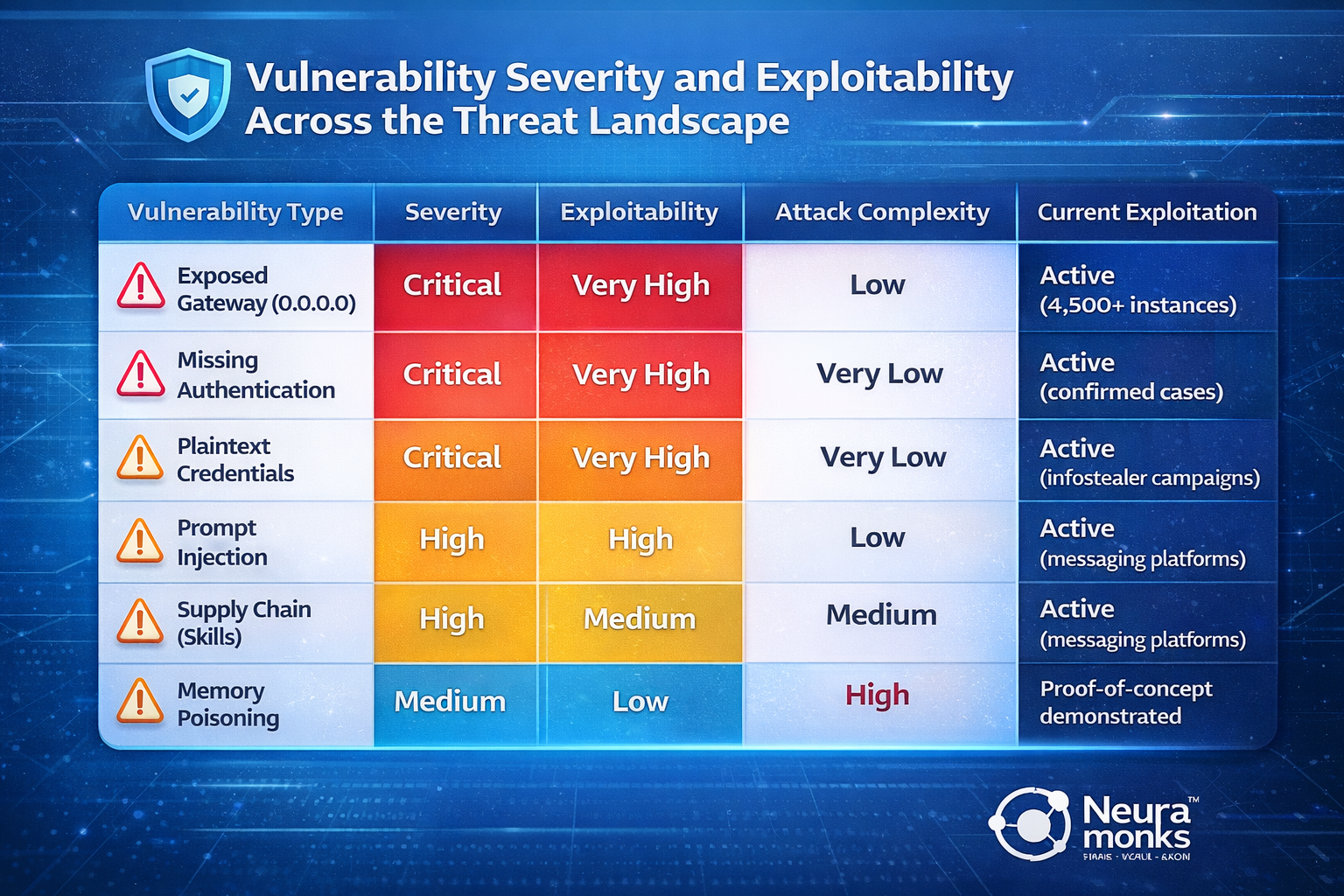

Here's how vulnerability severity and exploitability compare across the threat landscape:

Enterprises exploring AI solutions for automation and productivity need to recognize that these aren't traditional security vulnerabilities with patches on the way—they're architectural characteristics of autonomous agents that require fundamentally different security approaches. Organizations like Neuramonks that specialize in enterprise AI deployments implement security controls at the architecture level rather than trying to retrofit protection onto inherently insecure designs.

Why Traditional Security Controls Fail Against Autonomous AI Agents

Security teams trained on protecting web applications, databases, and traditional enterprise software find themselves unprepared for the challenges autonomous AI agents present. The security model we've built over twenty years of modern computing doesn't translate effectively to systems that are designed to break boundaries and cross security domains. Here's why conventional controls fail:

- AI agents break defined operational boundaries by design: Traditional applications operate within clearly defined scopes—a web server processes HTTP requests, a database manages data queries, a file sync tool moves files between locations. Autonomous AI agents explicitly reject these boundaries, integrating across systems, interpreting ambiguous natural language commands, and making contextual decisions about what actions to take. You can't sandbox something whose entire purpose is escaping sandboxes.

- Static application security testing can't catch dynamic reasoning-driven risks: SAST tools analyze code for known vulnerability patterns—SQL injection, XSS, buffer overflows, hardcoded secrets. But AI agent vulnerabilities emerge from the agent's reasoning process, not from code patterns. How do you write a static rule that detects when an AI might be persuaded through clever prompting to exfiltrate data? The attack surface is in the model's decision-making, not in exploitable code paths.

- Autonomous decision-making bypasses approval workflows: Traditional security controls often rely on human checkpoints—code review before deployment, approval workflows for sensitive operations, manual verification of critical actions. Autonomous agents are specifically designed to operate without these checkpoints. Reintroducing human approval for every action defeats the entire purpose of automation, but removing it creates operational risk most organizations aren't prepared to accept.

- Persistent memory creates delayed multi-turn attack chains: Traditional security monitoring looks for patterns indicating compromise—unusual network connections, unexpected file access, suspicious command execution. But when malicious instructions can be inserted into memory weeks before they trigger execution, traditional indicators of compromise appear disconnected from the initial breach. The attack timeline becomes too distributed for conventional correlation.

- Trust assumptions in messaging platforms fail spectacularly: Security controls in email systems and collaboration platforms assume humans will exercise judgment about message trustworthiness. Phishing awareness training teaches employees to question suspicious messages. But when an AI processes these messages automatically, applying the same trust level to forwarded messages from strangers as to messages from family members, all that human judgment gets bypassed completely.

- Integration amplifies rather than contains impact: Traditional security architecture uses segmentation to limit breach impact—if one system gets compromised, the blast radius stays contained. But AI agents integrate across platforms and services specifically to provide unified capabilities. Compromise doesn't stay contained—it spreads across every connected system, with the agent's own legitimate access providing the perfect cover for malicious activity.

This isn't a criticism of autonomous AI agents—it's a recognition that they represent a fundamentally different security paradigm. The companies succeeding with these deployments aren't the ones trying to apply traditional controls harder. They're the ones rethinking security architecture from first principles, designing governance frameworks that preserve autonomy while limiting catastrophic failure modes, and building monitoring that detects reasoning-driven threats rather than just looking for known attack patterns.

Enterprise Defense Framework: Securing AI Agents Without Killing Functionality

Securing autonomous AI agents requires a systematic approach that balances protection against exploitation with preserving the capabilities that make these systems valuable. Here's the defense framework security teams should implement for any AI agent deployment:

- Immediate actions for existing deployments: Conduct an audit of all AI agent instances running in your environment—including shadow IT deployments on employee devices. Identify exposed instances using network scans, verify authentication is properly configured, immediately revoke any credentials that might have been exposed, isolate compromised or misconfigured systems from production networks until they can be hardened, and document what data and systems each agent has accessed.

- Configuration hardening to eliminate low-hanging vulnerabilities: Change gateway binding from 0.0.0.0 to loopback (127.0.0.1) to prevent direct internet exposure. Enable and enforce strong authentication on all control interfaces using multi-factor authentication where possible. Migrate credential storage from plaintext files to encrypted vaults or operating system keychains. Disable unnecessary integrations and services to reduce attack surface. Configure the agent to require explicit approval for sensitive operations like external communication, file deletion, or executing administrative commands.

- Network segmentation restricting access to trusted paths only: Never expose AI agent control interfaces directly to the public internet. Implement VPN or Tailscale for remote access rather than port forwarding. Use firewall rules to explicitly allowlist necessary connections and block everything else. Segment AI agent infrastructure from production systems unless integration is absolutely required. Monitor and log all network connections the agent makes, alerting on unexpected destinations.

- Comprehensive monitoring and detection covering agent-specific threats: Set up alerts for exposed ports and unauthenticated access attempts to AI agent control interfaces. Monitor the agent process for unexpected command execution patterns, particularly shell commands accessing sensitive directories or making network connections to unknown domains. Deploy endpoint detection and response tools specifically configured to detect information-stealing malware targeting AI agent credential stores. Track and validate the integrity of configuration files, detecting unauthorized modifications that might indicate compromise or memory poisoning.

- Supply chain validation before installing third-party capabilities: Never install skills or extensions from untrusted sources without thorough review. Examine the code manually for suspicious operations like credential exfiltration, unexpected network requests, or system modification commands. Check the developer's reputation, looking for established history rather than newly created accounts. Monitor for typosquatting and lookalike skills designed to impersonate legitimate tools. Consider maintaining an internal vetted skills library rather than allowing arbitrary public installations.

- Least-privilege implementation limiting damage from compromise: Grant AI agents only the minimum permissions necessary for their specific tasks—file system access only to designated directories, shell command execution only for approved commands through allowlists, network access only to explicitly required services. Implement role-based access control so different automation tasks run with different privilege levels. Require human approval workflows for any operation that could cause significant business impact—financial transactions, data deletion, external communications to customers or partners, or modifications to production systems.

- Incident response planning specific to AI agent compromise: Define clear procedures for responding to compromised AI agents—immediate isolation steps, credential revocation processes, forensic data collection requirements. Establish who has authority to shut down agent operations if compromise is suspected. Document all systems and data the agent has access to so incident scope can be quickly assessed. Plan communication protocols for notifying affected users or external parties if the agent's connected accounts are used maliciously. Test these procedures regularly rather than discovering gaps during an actual incident.

The goal isn't to make autonomous AI agents completely risk-free—that's impossible for systems designed to operate with broad authority across organizational boundaries. The goal is reducing risk to acceptable levels while preserving the capabilities that make these systems valuable for automation and productivity. Organizations that implement this framework thoughtfully can deploy AI agents that deliver business value without creating security nightmares that keep CISOs awake at night.

For enterprises that need professional security architecture for AI agent deployments, Neuramonks provides comprehensive consulting services covering threat modeling, security design, governance frameworks, and implementation of defense-in-depth controls specifically tailored to autonomous AI systems. We've helped organizations across industries deploy AI infrastructure that satisfies security teams, passes compliance audits, and delivers reliable automation without creating unacceptable risk.

Strategic Perspective: The Future of AI Agent Security

Clawbot or Moltbot represents both a warning and an opportunity. The warning is clear—autonomous AI agents deployed without proper security architecture create catastrophic risks that traditional controls can't adequately mitigate. The rapid exploitation following viral adoption demonstrates that threat actors are ready and able to capitalize on these vulnerabilities at scale. Organizations treating AI agent deployment as a simple software installation rather than a fundamental change in their security model will face consequences.

Autonomous AI agents can transform operations, but success depends on treating them as critical infrastructure from the start. Secure deployments rely on basics—least-privilege access, encrypted credentials, restricted interfaces, approvals for sensitive actions, and vetted code. AI security isn’t optional; it’s what turns automation into long-term value instead of a short-lived experiment. Design with threat modeling, build controls into the architecture, and govern autonomy without losing control.

This is just the beginning of the agentic era. The security challenges we're seeing with autonomous AI agents will only grow more complex as these systems become more capable and more deeply integrated into business operations. Organizations that invest now in understanding these threats and building proper defenses will have significant competitive advantages over those playing catch-up after their first major breach.

Ready to secure your AI infrastructure before the next breach? The threats facing autonomous AI agents aren't going away—they're accelerating as adoption grows. Neuramonks helps enterprises deploy AI agents with the security architecture, governance frameworks, and monitoring capabilities that keep both productivity and protection intact.

Our team has built security-first AI deployments for organizations that can't afford to treat autonomous agents as experiments. We handle the complexity—threat modeling, configuration hardening, permission frameworks, supply chain validation, and incident response planning—so you get AI infrastructure that passes security audits and delivers business value.

Schedule a security consultation with Neuramonks to assess your AI agent risk exposure, or contact our team to discuss enterprise-grade deployment strategies that your CISO will actually approve. Because the difference between AI that transforms operations and AI that creates incidents is how seriously you take security from day one